All-In-One-Deflicker

AU Kwan Wo

Introduction

All-In-One-Deflicker is a general postprocessing framework that can remove different types of flicker from a wide range of videos, including videos produced by video capture, video processing, and video generation.

Setting up

A setup for the model is provided as a demonstration in a Google Colab notebook. Follow its instructions to create the environment the tool needs. You can also set it up locally for a faster runtime. Note that running the model locally requires Linux, with conda installed to set up and manage environments. Details can be found on the GitHub page: https://github.com/ChenyangLEI/All-In-One-Deflicker
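Before attempting a local setup, a quick check of the two stated requirements (Linux, and conda available) can save some time. The sketch below uses a hypothetical helper, check_local_prerequisites, written for this tutorial and not part of the repository. It only checks the operating system and whether a conda executable is on PATH; it does not check GPU or driver support.

```python
import shutil
import sys


def check_local_prerequisites() -> dict:
    """Report whether this machine meets the local-setup requirements named in this tutorial.

    Only two things are checked: the OS is Linux, and a `conda` executable is on PATH.
    """
    return {
        "is_linux": sys.platform.startswith("linux"),
        "conda_on_path": shutil.which("conda") is not None,
    }


if __name__ == "__main__":
    # Print one line per requirement, e.g. "is_linux: OK"
    for requirement, ok in check_local_prerequisites().items():
        print(f"{requirement}: {'OK' if ok else 'MISSING'}")
```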

Prompt

To use the deflicker directly, you only need to provide the media you want to process, which can be either a video file or a folder of images (the 'frames' of a video). If the result is unsatisfactory, some hyperparameters can also be modified manually in src/config/config_flow_100.json if you have cloned the repository to your local machine. If you are using the Colab notebook, you can change the values directly in the input boxes provided. The following are descriptions of some of the important hyperparameters.

- Iteration numbers: increasing the iteration number increases the number of iterations used to process the video. (In the Colab notebook, it is recommended to keep the iteration number low so that processing time stays reasonable.)

- Optical flow loss weight: tune optical_flow_coeff according to the intensity of the flicker in your video.

- Alpha flow factor: this parameter is only used when processing videos with segmentation masks. You can lower alpha_flow_factor for videos with minor flickering.

- Maximum number of frames: maximum_number_of_frames can be adjusted to limit the number of frames in the output. Note that it is recommended to split long videos into several shorter sequences, as performance on longer videos has yet to be evaluated.
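If the input is a folder of frames, a long sequence can be split before processing. The sketch below assumes that frame filenames sort in playback order (for example zero-padded names such as 00001.png) and that the frames are PNG or JPEG files. split_frame_folder and chunk are hypothetical helpers, not part of the repository. Each resulting sub-folder can then be given to the deflicker as a separate input.

```python
import shutil
from pathlib import Path

# Assumed frame formats; extend if your frames use another extension.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def chunk(items, size):
    """Split a list into consecutive pieces of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    return [items[i:i + size] for i in range(0, len(items), size)]


def split_frame_folder(src_dir, dst_dir, max_frames):
    """Copy the frames in src_dir into numbered sub-folders of dst_dir.

    Frames are taken in filename order, and each sub-folder holds at most
    max_frames consecutive frames. Returns the list of sub-folder paths.
    """
    src, dst = Path(src_dir), Path(dst_dir)
    frames = sorted(p for p in src.iterdir() if p.suffix.lower() in IMAGE_SUFFIXES)
    folders = []
    for index, group in enumerate(chunk(frames, max_frames)):
        part = dst / f"part_{index:03d}"
        part.mkdir(parents=True, exist_ok=True)
        for frame in group:
            shutil.copy2(frame, part / frame.name)  # keep the original filename
        folders.append(part)
    return folders
```

For example, split_frame_folder("frames", "frames_split", 100) copies the frames into frames_split/part_000, frames_split/part_001, and so on, with at most 100 frames per folder.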

Alternatively, you can process videos with segmentation masks generated by Carvekit, which can further improve the atlas by removing the background of the videos, especially for videos with salient objects or humans. This implementation currently supports only one foreground object plus the background. Note that using segmentation masks can significantly increase the processing time, and it is suggested to also increase the number of iterations when segmentation masks are on.
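For a local clone, the hyperparameters described above can also be edited from a script rather than by hand. The sketch below is a hypothetical helper, update_config, that loads a JSON config such as src/config/config_flow_100.json, overwrites only keys that already exist, and writes the file back. The key names in the usage example are taken from this tutorial and are assumptions; check them against the actual file before use.

```python
import json
from pathlib import Path


def update_config(config_path, overrides):
    """Overwrite selected hyperparameters in a JSON config file and return the new config.

    Keys that are not already present raise a KeyError, so a misspelled
    hyperparameter name is caught instead of silently adding a new entry.
    """
    path = Path(config_path)
    config = json.loads(path.read_text())
    unknown = [key for key in overrides if key not in config]
    if unknown:
        raise KeyError(f"not in {path.name}: {unknown}")
    config.update(overrides)
    # Note: this rewrites the whole file with 4-space indentation.
    path.write_text(json.dumps(config, indent=4))
    return config
```

For example, update_config("src/config/config_flow_100.json", {"optical_flow_coeff": 5, "maximum_number_of_frames": 100}) would change those two values, assuming both keys exist in the file. Rejecting unknown keys is a deliberate choice: a typo such as "optical_flow_coef" would otherwise be written to the file and ignored by the model.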

Demonstrations

All-In-One-Deflicker can process a variety of videos. Some demonstrations are shown below for reference (the videos were generated with the pre-trained weights downloaded from the GitHub page).

film grains:

original

result

old cartoons:

original

result

video shot of a computer screen:

original

result

Hyperparameters

Hyperparameter adjustment on a sample video:

original

default hyperparameters

iteration_number: 15000

optical_flow_coeff: 5

segmentation mask

As mentioned on the GitHub page, using a segmentation mask has a limited impact on the final quality. It is also worth noting that lowering optical_flow_coeff from 500 to 5 does not significantly degrade the result, even though the input video flickers quite heavily. A detailed comparison is shown below:

The result on the right was processed with a segmentation mask, while the one on the left was processed with the default hyperparameters and no segmentation mask. With the segmentation mask on, the colors show more contrast and the patterns of the man's scarf and shirt appear sharper.

Out of curiosity, I also applied the tool to a more extreme sample to see its effect. Deflickering this sample by hand in editing software would normally require a large amount of work because of the rapid color changes caused by the light. Below are the results processed with different hyperparameters.

limit testing:

original

result

default

result

optical_flow_coeff: 50000000

sample_batch: 4

Comparison between the default output and the output with adjusted hyperparameters:

In the comparison, the video on the left is the default output and the video on the right is the adjusted output. The first noticeable effect is the darker color scheme of the video on the right. The difference is most visible in the reflection of the light on the ground, which has sharper edges. Using a segmentation mask with very high 'alpha_flow_factor' and 'iteration_number' values produced a similar result. This suggests that, at least for this sample, adjusting the hyperparameters does not move the output far from the default.
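The comparisons in this section are visual. To attach a rough number to them, one option is to measure how much the average brightness jumps from one frame to the next, either in the input (to gauge how strong the flicker is) or in an output. The sketch below is an illustrative heuristic, not a metric used by All-In-One-Deflicker or the paper. It assumes the frames have already been loaded as NumPy arrays with any video or image reader, and it ignores motion and local flicker, so it is only a coarse indicator.

```python
import numpy as np


def mean_brightness_per_frame(frames):
    """Average pixel value of each frame; frames has shape (T, H, W) or (T, H, W, C)."""
    frames = np.asarray(frames, dtype=np.float64)
    return frames.reshape(frames.shape[0], -1).mean(axis=1)


def flicker_score(frames):
    """Mean absolute change in average brightness between consecutive frames.

    0.0 means the global brightness never changes. A lower score after
    processing suggests, but does not prove, reduced flicker.
    """
    brightness = mean_brightness_per_frame(frames)
    if brightness.size < 2:
        return 0.0
    return float(np.abs(np.diff(brightness)).mean())
```

Because a scene can legitimately get brighter or darker (for example, a camera pan), the score is most meaningful when comparing different outputs of the same input rather than across unrelated videos.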

Surprisingly, a better result can be observed in concat.mp4, which the model generates under the neural_filter folder:

As shown in the figure, the image in the middle has no white region. It is striking that the flickering in the middle video is completely eliminated, despite its lower resolution. In extreme cases like this, it is worth checking concat.mp4 for a better result.
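To keep only the middle video from concat.mp4, it can be cropped with ffmpeg's crop filter, whose arguments are crop=width:height:x:y. The sketch below only builds the command. It assumes that concat.mp4 consists of equally wide panels placed side by side horizontally, which should be checked against the actual file, and middle_panel_crop_command is a hypothetical helper. The frame width and height can be read with a tool such as ffprobe.

```python
def middle_panel_crop_command(src, dst, total_width, height, panels=3):
    """Build an ffmpeg command that keeps only the middle panel of a side-by-side video.

    Assumes `panels` panels of equal width laid out left to right.
    """
    if panels < 1 or panels % 2 == 0:
        raise ValueError("panels must be a positive odd number to have a single middle panel")
    panel_width = total_width // panels
    x_offset = panel_width * (panels // 2)  # left edge of the middle panel
    crop = f"crop={panel_width}:{height}:{x_offset}:0"
    return ["ffmpeg", "-i", str(src), "-vf", crop, str(dst)]


if __name__ == "__main__":
    # Example only: replace 1536 and 288 with the real size of concat.mp4.
    print(" ".join(middle_panel_crop_command("concat.mp4", "middle.mp4", 1536, 288)))
```

The returned list can be passed to subprocess.run(..., check=True) to perform the crop, provided ffmpeg is installed.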

Significance

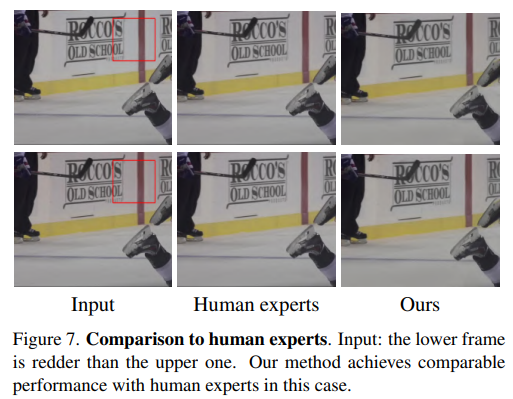

At the moment, one common approach to handle deflickering issues is by recruiting human experts to manually process videos using commercial software. Below is an image from the research paper showing a comparison between human experts and All-In-One-Deflicker by the researchers.

With results comparable to those of human experts, All-In-One-Deflicker can process much larger amounts of data at a significantly lower cost. Deflickering videos manually has always been time-consuming and inconvenient, and All-In-One-Deflicker suggests that automation in this field is becoming practical.

Besides, prior work usually requires specific information about the input, such as the flicker frequency, manual annotations, or additional consistent videos, to remove flicker. This tool needs no extra guidance to process a video. Thanks to this 'blind deflickering' approach, it has a wide range of potential applications.

Conclusion

Flickering is a very common problem in video shooting. It occurs when the frame rate and shutter speed are not matched to a pulsing light source, so that each frame captures a different fraction of the light pulses. All-In-One-Deflicker makes removing it automated and convenient. This tutorial introduced it as a tool for processing your own videos without manually adjusting effects in commercial software, and hopefully it helps you make use of the tool.
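The mismatch between shutter speed and light pulses can be illustrated with a toy model. The sketch below assumes a light whose intensity follows sin^2(2*pi*f*t) for a mains frequency f of 50 Hz, so it pulses 100 times per second. This is a simplification of real lamps. Each frame averages the light over its exposure window, starting at t = k / fps. It illustrates the cause of flicker only and is not part of All-In-One-Deflicker.

```python
import math


def mean_light_per_frame(fps, exposure_s, mains_hz=50.0, n_frames=30):
    """Average light intensity captured in each frame under a pulsing light.

    Toy model (an assumption): intensity I(t) = sin^2(2*pi*mains_hz*t), and
    frame k integrates I(t) over [k / fps, k / fps + exposure_s].
    """
    omega = 2 * math.pi * mains_hz
    means = []
    for k in range(n_frames):
        start = k / fps
        end = start + exposure_s
        # Closed form: integral of sin^2(omega*t) dt = t/2 - sin(2*omega*t) / (4*omega)
        integral = (end - start) / 2 - (
            math.sin(2 * omega * end) - math.sin(2 * omega * start)
        ) / (4 * omega)
        means.append(integral / exposure_s)  # mean intensity during the exposure
    return means


def flicker_ratio(values):
    """Peak-to-peak variation relative to the average (0 means no flicker)."""
    average = sum(values) / len(values)
    return (max(values) - min(values)) / average
```

Under these assumptions, flicker_ratio(mean_light_per_frame(30, 1/100)) is essentially 0, because a 1/100 s exposure spans exactly one light pulse and every frame collects the same amount of light. With a 1/1000 s exposure, flicker_ratio(mean_light_per_frame(30, 1/1000)) is large, because each short exposure catches a different part of the pulse.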

Reference

Lei, C., Ren, X., Zhang, Z., & Chen, Q. (2023, March 14). Blind video deflickering by neural filtering with a flawed Atlas. arXiv.org. https://arxiv.org/abs/2303.08120